time-series + classification

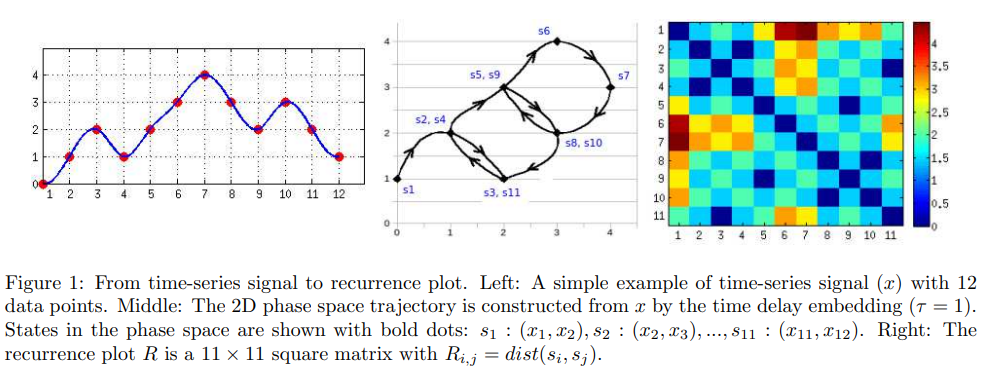

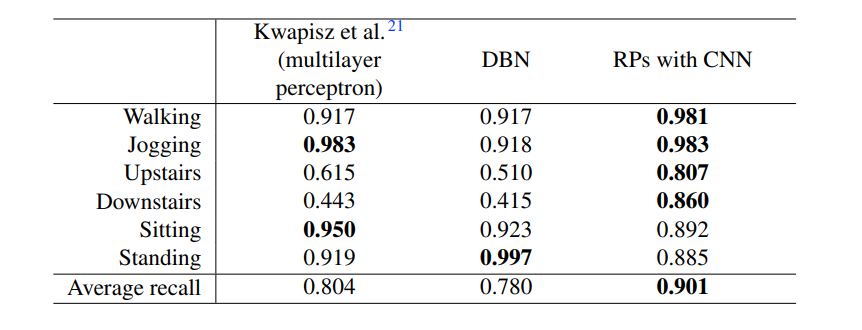

recurrence plot and CNN for time-series classification

It is helpful to think of this architecture as defining two sub-models: the CNN Model for feature extraction and the LSTM Model for interpreting the features across time steps.

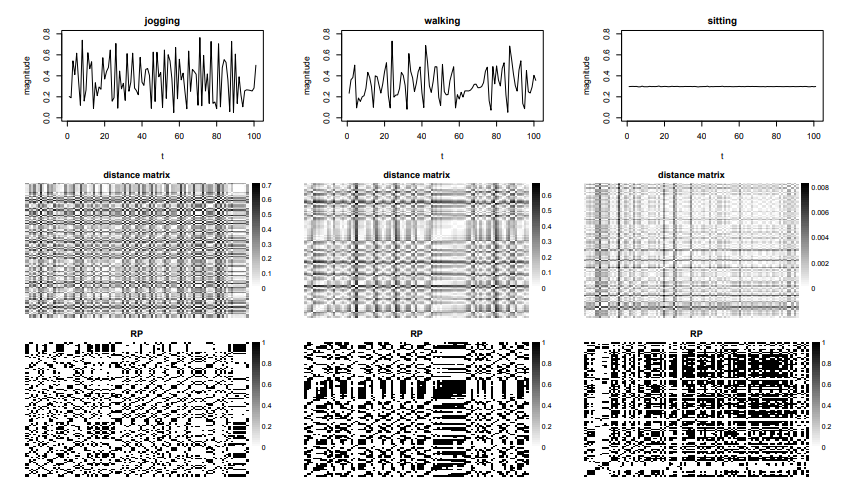

time-series 데이터를 2차원 그림으로 전환한다.

이 때, recurrence plot을 활용한다. 자연스럽게 2차원 그림이 얻어진다.

그 다음 CNN방법을 활용한다. CNN 방법은 특히, 그림이 의미하는 바를 잘 이해하는 데 아주 유용한 방법이다.

recurrence plot정의를 넘어서 cross recurrence plot의 정의도 가능하다.

import numpy as np

from scipy.spatial.distance import pdist, squareform

def rec_plot(s, eps=0.1, steps=10):

d = pdist(s[:,None])

d = np.floor(d/eps)

d[d>steps] = steps

Z = squareform(d)

return Z

def recurrence_plot(s, eps=None, steps=None):

if eps==None: eps=0.1

if steps==None: steps=10

d = sk.metrics.pairwise.pairwise_distances(s)

d = np.floor(d / eps)

d[d > steps] = steps

#Z = squareform(d)

return d

model = Sequential()

model.add(Convolution2D(32, (3, 3), activation='relu', input_shape=(1,32,32), data_format='channels_first'))

model.add(MaxPooling2D(pool_size=(2,2)))

model.add(Dropout(0.25))

model.add(Convolution2D(32, (3, 3),activation='relu'))

model.add(MaxPooling2D(pool_size=(2,2)))

model.add(Dropout(0.25))

model.add(Flatten())

model.add(Dense(64, activation='relu'))

#model.add(LeakyReLU(alpha=0.03))

model.add(Dropout(0.5))

model.add(Dense(3, activation='softmax'))

model.compile(loss='categorical_crossentropy',

optimizer='adam',

metrics=['accuracy'])

#reshape to include depth

X_train = x_train.reshape(x_train.shape[0], 1, 32,32)

#convert to float32 and normalize to [0,1]

X_train = X_train.astype('float32')

X_train /= np.amax(X_train)

# convert labels to class matrix, one-hot-encoding

Y_train = np_utils.to_categorical(y_train, 3)

# split in train and test set

X_train, x_test, Y_train, y_test = train_test_split(X_train, Y_train, test_size=0.1)

model.fit(X_train, Y_train, epochs=200, batch_size=16,shuffle=True)

predictions= model.predict(x_test)

rounded = [np.argmax(x) for x in predictions]

print(K.eval(metrics.categorical_accuracy(y_test, np_utils.to_categorical(rounded, 3))))

---------------------------------------------------------------------------------------------------------------------

# lstm autoencoder recreate sequence

from numpy import array

from keras.models import Sequential

from keras.models import Model

from keras.layers import LSTM

from keras.layers import Dense

from keras.layers import RepeatVector

from keras.layers import TimeDistributed

from keras.utils import plot_model

# define input sequence

sequence = array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])

# reshape input into [samples, timesteps, features]

n_in = len(sequence)

sequence = sequence.reshape((1, n_in, 1))

# define model

model = Sequential()

model.add(LSTM(100, activation='relu', input_shape=(n_in,1)))

model.add(RepeatVector(n_in))

model.add(LSTM(100, activation='relu', return_sequences=True))

model.add(TimeDistributed(Dense(1)))

model.compile(optimizer='adam', loss='mse')

# fit model

model.fit(sequence, sequence, epochs=300, verbose=0)

# connect the encoder LSTM as the output layer

model = Model(inputs=model.inputs, outputs=model.layers[0].output)

plot_model(model, show_shapes=True, to_file='lstm_encoder.png')

# get the feature vector for the input sequence

yhat = model.predict(sequence)

print(yhat.shape)

print(yhat)

---------------------------------------------------------------------------------------------------------------------

model = Sequential()

model.add(LSTM(32, input_shape=(10, 2)))

model.add(Dense(1))

---------------------------------------------------------------------------------------------------------------------

from numpy import array

from keras.models import Sequential

from keras.layers import Dense

from keras.layers import TimeDistributed

from keras.layers import LSTM

# prepare sequence

length = 5

seq = array([i/float(length) for i in range(length)])

X = seq.reshape(1, length, 1)

y = seq.reshape(1, length, 1)

# define LSTM configuration

n_neurons = length

n_batch = 1

n_epoch = 1000

# create LSTM

model = Sequential()

model.add(LSTM(n_neurons, input_shape=(length, 1), return_sequences=True))

model.add(TimeDistributed(Dense(1)))

model.compile(loss='mean_squared_error', optimizer='adam')

print(model.summary())

# train LSTM

model.fit(X, y, epochs=n_epoch, batch_size=n_batch, verbose=2)

# evaluate

result = model.predict(X, batch_size=n_batch, verbose=0)

for value in result[0,:,0]:

print('%.1f' % value)

---------------------------------------------------------------------------------------------------------------------

# lstm model

from numpy import mean

from numpy import std

from numpy import dstack

from pandas import read_csv

from keras.models import Sequential

from keras.layers import Dense

from keras.layers import Flatten

from keras.layers import Dropout

from keras.layers import LSTM

from keras.utils import to_categorical

from matplotlib import pyplot

# load a single file as a numpy array

def load_file(filepath):

dataframe = read_csv(filepath, header=None, delim_whitespace=True)

return dataframe.values

# load a list of files and return as a 3d numpy array

def load_group(filenames, prefix=''):

loaded = list()

for name in filenames:

data = load_file(prefix + name)

loaded.append(data)

# stack group so that features are the 3rd dimension

loaded = dstack(loaded)

return loaded

# load a dataset group, such as train or test

def load_dataset_group(group, prefix=''):

filepath = prefix + group + '/Inertial Signals/'

# load all 9 files as a single array

filenames = list()

# total acceleration

filenames += ['total_acc_x_'+group+'.txt', 'total_acc_y_'+group+'.txt', 'total_acc_z_'+group+'.txt']

# body acceleration

filenames += ['body_acc_x_'+group+'.txt', 'body_acc_y_'+group+'.txt', 'body_acc_z_'+group+'.txt']

# body gyroscope

filenames += ['body_gyro_x_'+group+'.txt', 'body_gyro_y_'+group+'.txt', 'body_gyro_z_'+group+'.txt']

# load input data

X = load_group(filenames, filepath)

# load class output

y = load_file(prefix + group + '/y_'+group+'.txt')

return X, y

# load the dataset, returns train and test X and y elements

def load_dataset(prefix=''):

# load all train

trainX, trainy = load_dataset_group('train', prefix + 'HARDataset/')

print(trainX.shape, trainy.shape)

# load all test

testX, testy = load_dataset_group('test', prefix + 'HARDataset/')

print(testX.shape, testy.shape)

# zero-offset class values

trainy = trainy - 1

testy = testy - 1

# one hot encode y

trainy = to_categorical(trainy)

testy = to_categorical(testy)

print(trainX.shape, trainy.shape, testX.shape, testy.shape)

return trainX, trainy, testX, testy

# fit and evaluate a model

def evaluate_model(trainX, trainy, testX, testy):

verbose, epochs, batch_size = 0, 15, 64

n_timesteps, n_features, n_outputs = trainX.shape[1], trainX.shape[2], trainy.shape[1]

model = Sequential()

model.add(LSTM(100, input_shape=(n_timesteps,n_features)))

model.add(Dropout(0.5))

model.add(Dense(100, activation='relu'))

model.add(Dense(n_outputs, activation='softmax'))

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

# fit network

model.fit(trainX, trainy, epochs=epochs, batch_size=batch_size, verbose=verbose)

# evaluate model

_, accuracy = model.evaluate(testX, testy, batch_size=batch_size, verbose=0)

return accuracy

# summarize scores

def summarize_results(scores):

print(scores)

m, s = mean(scores), std(scores)

print('Accuracy: %.3f%% (+/-%.3f)' % (m, s))

# run an experiment

def run_experiment(repeats=10):

# load data

trainX, trainy, testX, testy = load_dataset()

# repeat experiment

scores = list()

for r in range(repeats):

score = evaluate_model(trainX, trainy, testX, testy)

score = score * 100.0

print('>#%d: %.3f' % (r+1, score))

scores.append(score)

# summarize results

summarize_results(scores)

# run the experiment

run_experiment()

---------------------------------------------------------------------------------------------------------------------

# cnn lstm model

from numpy import mean

from numpy import std

from numpy import dstack

from pandas import read_csv

from keras.models import Sequential

from keras.layers import Dense

from keras.layers import Flatten

from keras.layers import Dropout

from keras.layers import LSTM

from keras.layers import TimeDistributed

from keras.layers.convolutional import Conv1D

from keras.layers.convolutional import MaxPooling1D

from keras.utils import to_categorical

from matplotlib import pyplot

# load a single file as a numpy array

def load_file(filepath):

dataframe = read_csv(filepath, header=None, delim_whitespace=True)

return dataframe.values

# load a list of files and return as a 3d numpy array

def load_group(filenames, prefix=''):

loaded = list()

for name in filenames:

data = load_file(prefix + name)

loaded.append(data)

# stack group so that features are the 3rd dimension

loaded = dstack(loaded)

return loaded

# load a dataset group, such as train or test

def load_dataset_group(group, prefix=''):

filepath = prefix + group + '/Inertial Signals/'

# load all 9 files as a single array

filenames = list()

# total acceleration

filenames += ['total_acc_x_'+group+'.txt', 'total_acc_y_'+group+'.txt', 'total_acc_z_'+group+'.txt']

# body acceleration

filenames += ['body_acc_x_'+group+'.txt', 'body_acc_y_'+group+'.txt', 'body_acc_z_'+group+'.txt']

# body gyroscope

filenames += ['body_gyro_x_'+group+'.txt', 'body_gyro_y_'+group+'.txt', 'body_gyro_z_'+group+'.txt']

# load input data

X = load_group(filenames, filepath)

# load class output

y = load_file(prefix + group + '/y_'+group+'.txt')

return X, y

# load the dataset, returns train and test X and y elements

def load_dataset(prefix=''):

# load all train

trainX, trainy = load_dataset_group('train', prefix + 'HARDataset/')

print(trainX.shape, trainy.shape)

# load all test

testX, testy = load_dataset_group('test', prefix + 'HARDataset/')

print(testX.shape, testy.shape)

# zero-offset class values

trainy = trainy - 1

testy = testy - 1

# one hot encode y

trainy = to_categorical(trainy)

testy = to_categorical(testy)

print(trainX.shape, trainy.shape, testX.shape, testy.shape)

return trainX, trainy, testX, testy

# fit and evaluate a model

def evaluate_model(trainX, trainy, testX, testy):

# define model

verbose, epochs, batch_size = 0, 25, 64

n_timesteps, n_features, n_outputs = trainX.shape[1], trainX.shape[2], trainy.shape[1]

# reshape data into time steps of sub-sequences

n_steps, n_length = 4, 32

trainX = trainX.reshape((trainX.shape[0], n_steps, n_length, n_features))

testX = testX.reshape((testX.shape[0], n_steps, n_length, n_features))

# define model

model = Sequential()

model.add(TimeDistributed(Conv1D(filters=64, kernel_size=3, activation='relu'), input_shape=(None,n_length,n_features)))

model.add(TimeDistributed(Conv1D(filters=64, kernel_size=3, activation='relu')))

model.add(TimeDistributed(Dropout(0.5)))

model.add(TimeDistributed(MaxPooling1D(pool_size=2)))

model.add(TimeDistributed(Flatten()))

model.add(LSTM(100))

model.add(Dropout(0.5))

model.add(Dense(100, activation='relu'))

model.add(Dense(n_outputs, activation='softmax'))

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

# fit network

model.fit(trainX, trainy, epochs=epochs, batch_size=batch_size, verbose=verbose)

# evaluate model

_, accuracy = model.evaluate(testX, testy, batch_size=batch_size, verbose=0)

return accuracy

# summarize scores

def summarize_results(scores):

print(scores)

m, s = mean(scores), std(scores)

print('Accuracy: %.3f%% (+/-%.3f)' % (m, s))

# run an experiment

def run_experiment(repeats=10):

# load data

trainX, trainy, testX, testy = load_dataset()

# repeat experiment

scores = list()

for r in range(repeats):

score = evaluate_model(trainX, trainy, testX, testy)

score = score * 100.0

print('>#%d: %.3f' % (r+1, score))

scores.append(score)

# summarize results

summarize_results(scores)

# run the experiment

run_experiment()

---------------------------------------------------------------------------------------------------------------------

# univariate lstm example

from numpy import array

from keras.models import Sequential

from keras.layers import LSTM

from keras.layers import Dense

# split a univariate sequence into samples

def split_sequence(sequence, n_steps):

X, y = list(), list()

for i in range(len(sequence)):

# find the end of this pattern

end_ix = i + n_steps

# check if we are beyond the sequence

if end_ix > len(sequence)-1:

break

# gather input and output parts of the pattern

seq_x, seq_y = sequence[i:end_ix], sequence[end_ix]

X.append(seq_x)

y.append(seq_y)

return array(X), array(y)

# define input sequence

raw_seq = [10, 20, 30, 40, 50, 60, 70, 80, 90]

# choose a number of time steps

n_steps = 3

# split into samples

X, y = split_sequence(raw_seq, n_steps)

# reshape from [samples, timesteps] into [samples, timesteps, features]

n_features = 1

X = X.reshape((X.shape[0], X.shape[1], n_features))

# define model

model = Sequential()

model.add(LSTM(50, activation='relu', input_shape=(n_steps, n_features)))

model.add(Dense(1))

model.compile(optimizer='adam', loss='mse')

# fit model

model.fit(X, y, epochs=200, verbose=0)

# demonstrate prediction

x_input = array([70, 80, 90])

x_input = x_input.reshape((1, n_steps, n_features))

yhat = model.predict(x_input, verbose=0)

print(yhat)

# univariate stacked lstm example

from numpy import array

from keras.models import Sequential

from keras.layers import LSTM

from keras.layers import Dense

# split a univariate sequence

def split_sequence(sequence, n_steps):

X, y = list(), list()

for i in range(len(sequence)):

# find the end of this pattern

end_ix = i + n_steps

# check if we are beyond the sequence

if end_ix > len(sequence)-1:

break

# gather input and output parts of the pattern

seq_x, seq_y = sequence[i:end_ix], sequence[end_ix]

X.append(seq_x)

y.append(seq_y)

return array(X), array(y)

# define input sequence

raw_seq = [10, 20, 30, 40, 50, 60, 70, 80, 90]

# choose a number of time steps

n_steps = 3

# split into samples

X, y = split_sequence(raw_seq, n_steps)

# reshape from [samples, timesteps] into [samples, timesteps, features]

n_features = 1

X = X.reshape((X.shape[0], X.shape[1], n_features))

# define model

model = Sequential()

model.add(LSTM(50, activation='relu', return_sequences=True, input_shape=(n_steps, n_features)))

model.add(LSTM(50, activation='relu'))

model.add(Dense(1))

model.compile(optimizer='adam', loss='mse')

# fit model

model.fit(X, y, epochs=200, verbose=0)

# demonstrate prediction

x_input = array([70, 80, 90])

x_input = x_input.reshape((1, n_steps, n_features))

yhat = model.predict(x_input, verbose=0)

print(yhat)

---------------------------------------------------------------------------------------------------------------------

# multivariate cnn example

from numpy import array

from numpy import hstack

from keras.models import Sequential

from keras.layers import Dense

from keras.layers import Flatten

from keras.layers.convolutional import Conv1D

from keras.layers.convolutional import MaxPooling1D

# split a multivariate sequence into samples

def split_sequences(sequences, n_steps):

X, y = list(), list()

for i in range(len(sequences)):

# find the end of this pattern

end_ix = i + n_steps

# check if we are beyond the dataset

if end_ix > len(sequences):

break

# gather input and output parts of the pattern

seq_x, seq_y = sequences[i:end_ix, :-1], sequences[end_ix-1, -1]

X.append(seq_x)

y.append(seq_y)

return array(X), array(y)

# define input sequence

in_seq1 = array([10, 20, 30, 40, 50, 60, 70, 80, 90])

in_seq2 = array([15, 25, 35, 45, 55, 65, 75, 85, 95])

out_seq = array([in_seq1[i]+in_seq2[i] for i in range(len(in_seq1))])

# convert to [rows, columns] structure

in_seq1 = in_seq1.reshape((len(in_seq1), 1))

in_seq2 = in_seq2.reshape((len(in_seq2), 1))

out_seq = out_seq.reshape((len(out_seq), 1))

# horizontally stack columns

dataset = hstack((in_seq1, in_seq2, out_seq))

# choose a number of time steps

n_steps = 3

# convert into input/output

X, y = split_sequences(dataset, n_steps)

# the dataset knows the number of features, e.g. 2

n_features = X.shape[2]

# define model

model = Sequential()

model.add(Conv1D(filters=64, kernel_size=2, activation='relu', input_shape=(n_steps, n_features)))

model.add(MaxPooling1D(pool_size=2))

model.add(Flatten())

model.add(Dense(50, activation='relu'))

model.add(Dense(1))

model.compile(optimizer='adam', loss='mse')

# fit model

model.fit(X, y, epochs=1000, verbose=0)

# demonstrate prediction

x_input = array([[80, 85], [90, 95], [100, 105]])

x_input = x_input.reshape((1, n_steps, n_features))

yhat = model.predict(x_input, verbose=0)

print(yhat)

---------------------------------------------------------------------------------------------------------------------

# univariate stacked lstm example

from numpy import array

from keras.models import Sequential

from keras.layers import LSTM

from keras.layers import Dense

# split a univariate sequence

def split_sequence(sequence, n_steps):

X, y = list(), list()

for i in range(len(sequence)):

# find the end of this pattern

end_ix = i + n_steps

# check if we are beyond the sequence

if end_ix > len(sequence)-1:

break

# gather input and output parts of the pattern

seq_x, seq_y = sequence[i:end_ix], sequence[end_ix]

X.append(seq_x)

y.append(seq_y)

return array(X), array(y)

# define input sequence

raw_seq = [10, 20, 30, 40, 50, 60, 70, 80, 90]

# choose a number of time steps

n_steps = 3

# split into samples

X, y = split_sequence(raw_seq, n_steps)

# reshape from [samples, timesteps] into [samples, timesteps, features]

n_features = 1

X = X.reshape((X.shape[0], X.shape[1], n_features))

# define model

model = Sequential()

model.add(LSTM(50, activation='relu', return_sequences=True, input_shape=(n_steps, n_features)))

model.add(LSTM(50, activation='relu'))

model.add(Dense(1))

model.compile(optimizer='adam', loss='mse')

# fit model

model.fit(X, y, epochs=200, verbose=0)

# demonstrate prediction

x_input = array([70, 80, 90])

x_input = x_input.reshape((1, n_steps, n_features))

yhat = model.predict(x_input, verbose=0)

print(yhat)

---------------------------------------------------------------------------------------------------------------------

# multi-headed cnn model

from numpy import mean

from numpy import std

from numpy import dstack

from pandas import read_csv

from matplotlib import pyplot

from keras.utils import to_categorical

from keras.utils.vis_utils import plot_model

from keras.models import Model

from keras.layers import Input

from keras.layers import Dense

from keras.layers import Flatten

from keras.layers import Dropout

from keras.layers.convolutional import Conv1D

from keras.layers.convolutional import MaxPooling1D

from keras.layers.merge import concatenate

# load a single file as a numpy array

def load_file(filepath):

dataframe = read_csv(filepath, header=None, delim_whitespace=True)

return dataframe.values

# load a list of files and return as a 3d numpy array

def load_group(filenames, prefix=''):

loaded = list()

for name in filenames:

data = load_file(prefix + name)

loaded.append(data)

# stack group so that features are the 3rd dimension

loaded = dstack(loaded)

return loaded

# load a dataset group, such as train or test

def load_dataset_group(group, prefix=''):

filepath = prefix + group + '/Inertial Signals/'

# load all 9 files as a single array

filenames = list()

# total acceleration

filenames += ['total_acc_x_'+group+'.txt', 'total_acc_y_'+group+'.txt', 'total_acc_z_'+group+'.txt']

# body acceleration

filenames += ['body_acc_x_'+group+'.txt', 'body_acc_y_'+group+'.txt', 'body_acc_z_'+group+'.txt']

# body gyroscope

filenames += ['body_gyro_x_'+group+'.txt', 'body_gyro_y_'+group+'.txt', 'body_gyro_z_'+group+'.txt']

# load input data

X = load_group(filenames, filepath)

# load class output

y = load_file(prefix + group + '/y_'+group+'.txt')

return X, y

# load the dataset, returns train and test X and y elements

def load_dataset(prefix=''):

# load all train

trainX, trainy = load_dataset_group('train', prefix + 'HARDataset/')

print(trainX.shape, trainy.shape)

# load all test

testX, testy = load_dataset_group('test', prefix + 'HARDataset/')

print(testX.shape, testy.shape)

# zero-offset class values

trainy = trainy - 1

testy = testy - 1

# one hot encode y

trainy = to_categorical(trainy)

testy = to_categorical(testy)

print(trainX.shape, trainy.shape, testX.shape, testy.shape)

return trainX, trainy, testX, testy

# fit and evaluate a model

def evaluate_model(trainX, trainy, testX, testy):

verbose, epochs, batch_size = 0, 10, 32

n_timesteps, n_features, n_outputs = trainX.shape[1], trainX.shape[2], trainy.shape[1]

# head 1

inputs1 = Input(shape=(n_timesteps,n_features))

conv1 = Conv1D(filters=64, kernel_size=3, activation='relu')(inputs1)

drop1 = Dropout(0.5)(conv1)

pool1 = MaxPooling1D(pool_size=2)(drop1)

flat1 = Flatten()(pool1)

# head 2

inputs2 = Input(shape=(n_timesteps,n_features))

conv2 = Conv1D(filters=64, kernel_size=5, activation='relu')(inputs2)

drop2 = Dropout(0.5)(conv2)

pool2 = MaxPooling1D(pool_size=2)(drop2)

flat2 = Flatten()(pool2)

# head 3

inputs3 = Input(shape=(n_timesteps,n_features))

conv3 = Conv1D(filters=64, kernel_size=11, activation='relu')(inputs3)

drop3 = Dropout(0.5)(conv3)

pool3 = MaxPooling1D(pool_size=2)(drop3)

flat3 = Flatten()(pool3)

# merge

merged = concatenate([flat1, flat2, flat3])

# interpretation

dense1 = Dense(100, activation='relu')(merged)

outputs = Dense(n_outputs, activation='softmax')(dense1)

model = Model(inputs=[inputs1, inputs2, inputs3], outputs=outputs)

# save a plot of the model

plot_model(model, show_shapes=True, to_file='multichannel.png')

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

# fit network

model.fit([trainX,trainX,trainX], trainy, epochs=epochs, batch_size=batch_size, verbose=verbose)

# evaluate model

_, accuracy = model.evaluate([testX,testX,testX], testy, batch_size=batch_size, verbose=0)

return accuracy

# summarize scores

def summarize_results(scores):

print(scores)

m, s = mean(scores), std(scores)

print('Accuracy: %.3f%% (+/-%.3f)' % (m, s))

# run an experiment

def run_experiment(repeats=10):

# load data

trainX, trainy, testX, testy = load_dataset()

# repeat experiment

scores = list()

for r in range(repeats):

score = evaluate_model(trainX, trainy, testX, testy)

score = score * 100.0

print('>#%d: %.3f' % (r+1, score))

scores.append(score)

# summarize results

summarize_results(scores)

# run the experiment

run_experiment()

---------------------------------------------------------------------------------------------------------------------

import numpy as np

def r_plot(data,delay=1):

transformed=np.zeros([2,len(data)-delay])

transformed[0,:]=data[0:len(data)-delay]

transformed[1,:]=data[delay:len(data)]

rp=np.zeros([len(data)-delay,len(data)-delay])

for i in range(len(rp)):

for j in range(len(rp)):

rp[i,j]=np.linalg.norm(transformed[:,i]-transformed[:,j])

return rp

def cr_plot(data1,data2,delay=1):

transformed=np.zeros([2,len(data1)-delay])

transformed[0,:]=data1[0:len(data1)-delay]

transformed[1,:]=data2[delay:len(data2)]

crp=np.zeros([len(data1)-delay,len(data2)-delay])

for i in range(len(crp)):

for j in range(len(crp)):

crp[i,j]=np.linalg.norm(transformed[:,i]-transformed[:,j])

return crp

def threshold(mat,thresh=0.2):

mini=mat.min()

maxi=mat.max()

trans=(mat-mini)/(maxi-mini)

for i in range(len(trans)):

for j in range(len(trans)):

if trans[i,j]> thresh:

trans[i,j]=1

else:

trans[i,j]=0

return trans

---------------------------------------------------------------------------------------------------------------------